Lamps and luminaires are commonly specified with two key metrics: correlated color temperature (CCT) and color rendering index (CRI). Both terms describe the output of a light source, and both have the word “color” in their names, but they refer to very different things.

What is CCT?

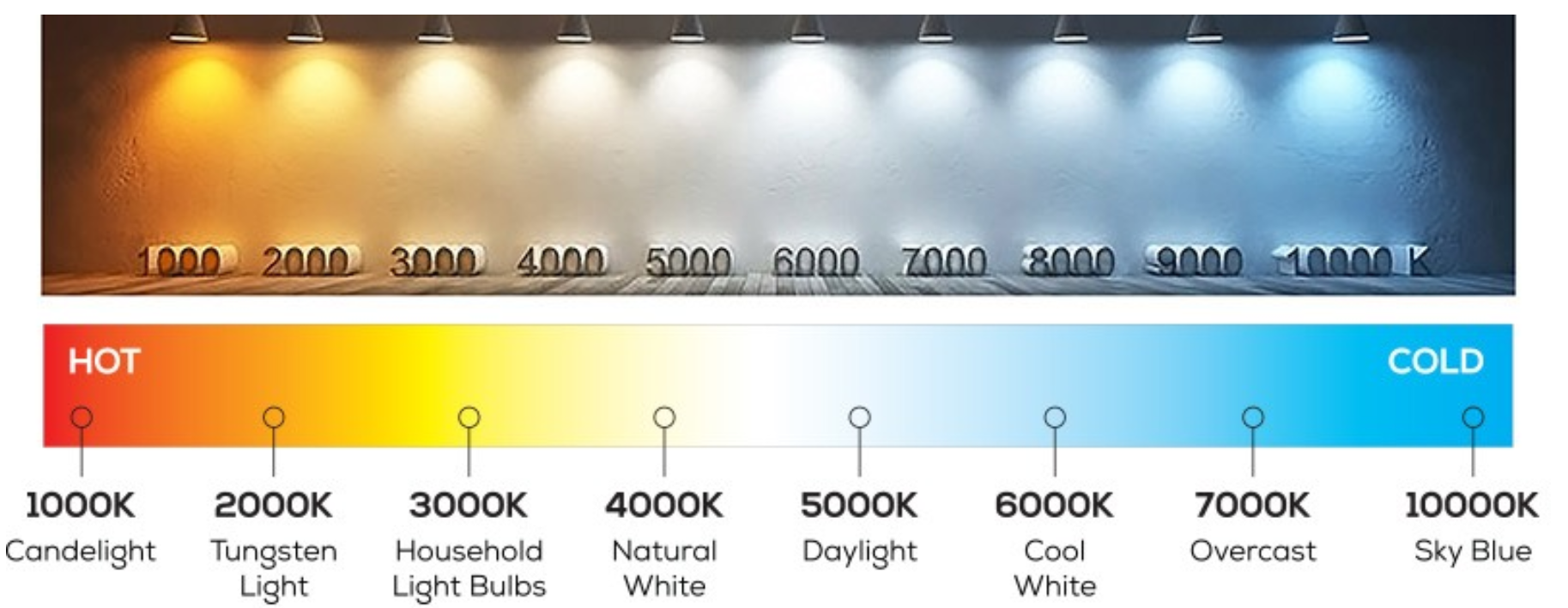

CCT is a number, measured in kelvin (K), that describes how warm or cool a light source appears. Most light bulbs range from 2700K (warm, mimicking the color of incandescent lamps) to 5000K and higher (a bright white resembling daylight outdoors on a sunny day).
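In practice, CCT is not read off a thermometer. A meter measures the light’s chromaticity (its CIE 1931 x, y coordinates), and the CCT is the temperature of the ideal blackbody radiator whose color is closest. As a minimal sketch, the Python below uses McCamy’s published cubic approximation to convert chromaticity to CCT. It assumes the light sits near the blackbody curve (roughly 2000K to 12500K); the coefficients are McCamy’s, while the function name is our own.

```python
def mccamy_cct(x, y):
    """Approximate CCT in kelvin from CIE 1931 (x, y) chromaticity.

    McCamy's cubic approximation; only reliable for light whose color
    lies close to the blackbody (Planckian) curve.
    """
    # n measures the chromaticity relative to McCamy's "epicenter" (0.3320, 0.1858)
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# CIE standard illuminant A (incandescent reference) and D65 (average daylight)
print(round(mccamy_cct(0.44757, 0.40745)))
print(round(mccamy_cct(0.31271, 0.32902)))
```

With these two standard chromaticities the results land close to the nominal 2856K of illuminant A and about 6504K for D65.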

For residential applications, 2700K and 3000K are popular color temperatures, because both create a warm, inviting and relaxing atmosphere.

Retail and commercial applications, however, tend toward cooler color temperatures. 4000K is very popular because it provides a cleaner, crisper shade of white. In task-oriented or industrial applications, 5000K or 6500K is preferred, since these color temperatures more closely match natural daylight.

When you hear the terms “warm” and “cool” used to describe light, keep in mind that they describe how the color looks, not the size of the CCT number; in fact, the two run in opposite directions. Orange light is “warm” but has a low CCT, while bluish light is “cool” but has a high CCT.
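The reason a low CCT looks “warm” comes from how CCT is defined: it refers to an ideal blackbody radiator, and Planck’s law says a cooler blackbody emits relatively more red light than blue. The small sketch below compares the two at a few temperatures. It uses the standard SI values of the physical constants; the choice of 450 nm and 650 nm as stand-ins for “blue” and “red” is an assumption made for illustration.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_nm, temp_k):
    """Blackbody spectral radiance (Planck's law), in W / (sr * m^3)."""
    lam = wavelength_nm * 1e-9
    # expm1(z) computes exp(z) - 1 accurately
    return (2.0 * H * C**2 / lam**5) / math.expm1(H * C / (lam * K_B * temp_k))


def blue_to_red_ratio(temp_k):
    """Emission at 450 nm (blue) divided by emission at 650 nm (red)."""
    return planck_radiance(450, temp_k) / planck_radiance(650, temp_k)


for t in (2700, 4000, 6500):
    print(t, round(blue_to_red_ratio(t), 2))
```

The ratio climbs as the temperature rises: at 2700K the blackbody emits far more red than blue, while by 6500K blue outweighs red. Real LEDs are not blackbodies, which is why their rating is a *correlated* color temperature.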

Most light sources used in residential, commercial and industrial applications fall in the range of 2700K (slightly yellow) to 6500K (slightly blue). LEDs for special applications also come in colors outside this white range, such as saturated red, green and blue, for which a CCT value is not meaningful.

What is CRI?

What exactly is CRI? According to the Lighting Research Center, “CRI (color rendering index), is the measurement of a light source’s ability to show object colors most naturally or realistically when compared to a reference/natural light source.” Or, more simply, CRI is a way to identify how well a light source preserves the natural colors of objects and environments.

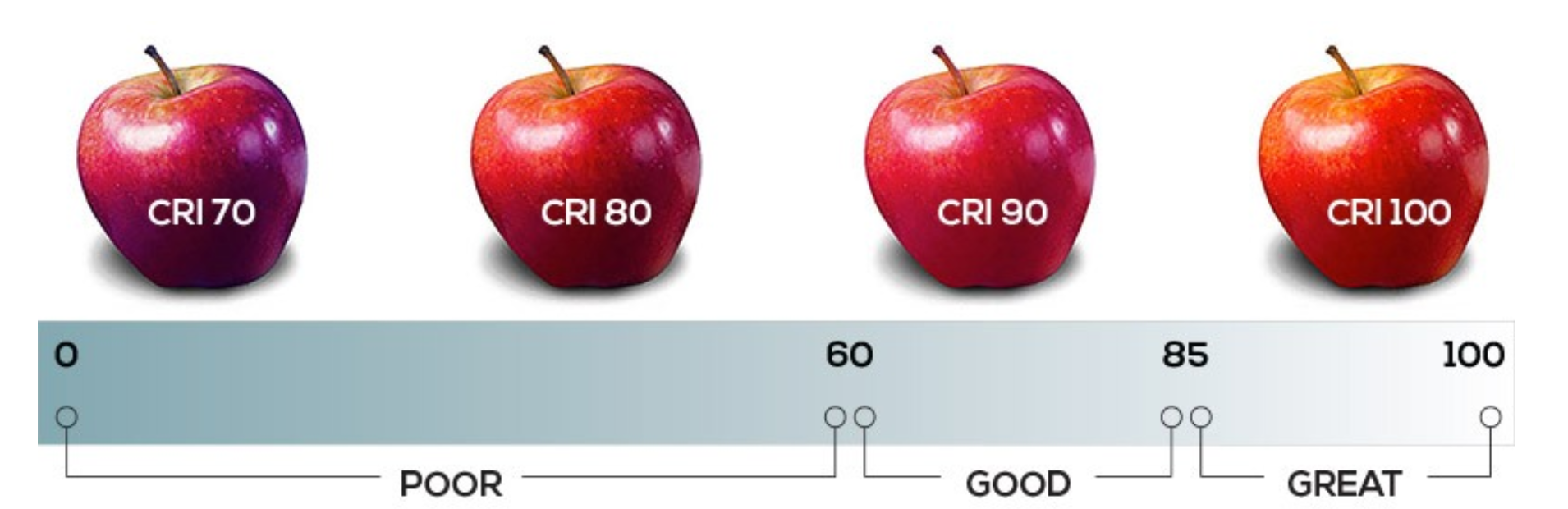

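CRI is rated on a scale that tops out at 100. A score of 100 means colors look exactly as they would under a reference light source of the same color temperature: natural daylight for cool sources, or an ideal incandescent-like (blackbody) source for warm ones. Sunlight and traditional incandescent bulbs both score at or very near 100. The concept is simple: the higher the CRI, the better colors look; the lower the CRI, the worse they look.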

Let’s use the apples shown above as an example. All of them are lit by a 2700K light (similar to Inspired LED’s Warm White LEDs), with the lowest CRI on the left and the highest CRI on the right. Just look at the CRI value listed under each apple. Compared with the apple on the right, the one on the far left appears dull, discolored, and certainly not very appetizing! As the CRI increases, the apple looks progressively more realistic, and its shades of red become more and more pronounced until it looks picture perfect!
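The CRI printed on spec sheets is usually the general index R_a defined by the CIE (publication 13.3). Each of eight standard test color samples is evaluated under the lamp and under a reference source of the same CCT; the color shift ΔE_i of sample i becomes a special index R_i = 100 − 4.6·ΔE_i, and R_a is the average of the eight. The sketch below shows only that final arithmetic and assumes the ΔE values have already been computed; the shift values in the example are made up for illustration.

```python
def special_index(delta_e):
    """Special color rendering index R_i for one test color sample.

    delta_e is the sample's color shift between the reference source and
    the lamp under test (CIE 1964 U*V*W* units, after chromatic adaptation).
    """
    return 100.0 - 4.6 * delta_e


def general_cri(delta_es):
    """General color rendering index R_a: the mean of R_1 through R_8."""
    if len(delta_es) != 8:
        raise ValueError("R_a is defined over the eight CIE test color samples")
    return sum(special_index(de) for de in delta_es) / 8.0


# Hypothetical color shifts for the eight samples, for illustration only
perfect = [0.0] * 8
poor = [6.0, 4.5, 3.0, 5.5, 6.5, 7.0, 5.0, 8.0]
print(general_cri(perfect))  # 100.0
print(round(general_cri(poor), 1))
```

A perfect match (no shift on any sample) yields exactly 100, which is why 100 is the top of the scale. Nothing in the formula stops the score from falling below zero for sources that distort colors badly.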

The difference between CRI and CCT:

As explained above, CCT and CRI measure two different aspects of color. CCT describes the color of the light emitted by the bulb, which a casual observer can see immediately by looking at the light source.

The CRI value, on the other hand, does not tell us the color of the light itself. Rather, it tells us about the color appearance of objects under the light source (the light source “renders” the colors of an object, hence the term). You cannot determine a light bulb’s CRI by looking at the light itself; with the naked eye, you can only estimate it by looking at the colors of objects the bulb illuminates.

A good illustration of this principle is the “color checker” used by photographers and artists: a palette of standardized colors that lets them estimate color rendering quality by eye. Actually measuring a light source’s CRI, however, requires specialized spectral measurement devices, and lighting manufacturers rely on data from these devices to publish and guarantee color rendering metrics.
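The color checker and the instrument measurement share a core step: comparing how each color patch looks under the lamp with how it looks under a reference. In the CIE 13.3 CRI procedure, that comparison is a Euclidean distance in the CIE 1964 U*V*W* color space. The minimal sketch below computes that distance for a single sample. It assumes you already have the sample’s luminance factor Y and CIE 1960 (u, v) chromaticity under both sources, with the lamp-side values already chromatically adapted to the reference white; those inputs and the function names are hypothetical.

```python
import math


def uvw_star(Y, u, v, u_white, v_white):
    """CIE 1964 U*V*W* from luminance factor Y (0-100) and CIE 1960 (u, v)."""
    w_star = 25.0 * Y ** (1.0 / 3.0) - 17.0
    u_star = 13.0 * w_star * (u - u_white)
    v_star = 13.0 * w_star * (v - v_white)
    return u_star, v_star, w_star


def delta_e_uvw(sample_ref, sample_test, white):
    """Color shift of one sample between the reference and test sources.

    sample_ref and sample_test are (Y, u, v) tuples; sample_test is assumed
    to be already chromatically adapted to the reference white (u0, v0).
    """
    u0, v0 = white
    ref = uvw_star(*sample_ref, u0, v0)
    test = uvw_star(*sample_test, u0, v0)
    return math.dist(ref, test)  # Euclidean distance in U*V*W*


white = (0.1978, 0.3122)                   # roughly D65 in CIE 1960 (u, v)
under_reference = (30.0, 0.2150, 0.3080)   # hypothetical measurement
under_lamp = (29.0, 0.2210, 0.3050)        # hypothetical measurement
de = delta_e_uvw(under_reference, under_lamp, white)
print(round(de, 2), round(100 - 4.6 * de, 1))  # color shift and its R_i
```

The larger the distance for a patch, the more the lamp shifts that color, which is exactly what your eye picks up when scanning a color checker.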

CCT vs. CRI: which is more important in lighting?

CRI only has meaning relative to a color temperature: a light’s color rendering is always scored against a reference source of the same CCT, so the CCT determines what the color appearance is being compared against.

CRI is certainly an important metric that helps explain color quality, but it is almost meaningless on its own, without a color temperature value. If you see a light bulb rated 95 CRI, you might be impressed and conclude that it must be very accurate. But accurate compared to what? Incandescent bulb light (2700K), natural sunlight (5000K) or natural daylight (6500K)?
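This dependence is built into the CIE 13.3 definition of CRI. The reference is a blackbody (Planckian) radiator at the lamp’s own CCT when that CCT is below 5000K, and a phase of CIE daylight at the same CCT from 5000K upward. Below is a sketch of that rule, using the CIE daylight-locus polynomials to locate the daylight reference; the function names are illustrative.

```python
def daylight_chromaticity(cct):
    """CIE daylight locus (x, y) for a CCT between 4000 K and 25000 K."""
    t = float(cct)
    if 4000 <= t <= 7000:
        x = -4.6070e9 / t**3 + 2.9678e6 / t**2 + 0.09911e3 / t + 0.244063
    elif 7000 < t <= 25000:
        x = -2.0064e9 / t**3 + 1.9018e6 / t**2 + 0.24748e3 / t + 0.237040
    else:
        raise ValueError("daylight locus formula covers 4000 K to 25000 K")
    y = -3.000 * x**2 + 2.870 * x - 0.275
    return x, y


def reference_for(cct):
    """Describe the reference source a lamp of this CCT is compared against."""
    # Below 5000 K: a blackbody at the same CCT; from 5000 K up: CIE daylight
    if cct < 5000:
        return f"Planckian radiator at {cct:.0f} K"
    x, y = daylight_chromaticity(cct)
    return f"CIE daylight at {cct:.0f} K (x={x:.4f}, y={y:.4f})"


for cct in (2700, 5000, 6500):
    print(reference_for(cct))
```

So a 95 CRI at 2700K means “close to a blackbody at 2700K,” while a 95 CRI at 6500K means “close to daylight at 6500K”: the same number measured against a different yardstick.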

Think first about the color temperature your application requires, and only then about CRI. Are you looking to replicate natural daylight? Pick a high color temperature (5000K or higher) first, then choose the CRI. A 2700K light bulb with a 95 CRI will not come close to replicating natural daylight, despite its high CRI, because its color temperature is way off.

Now, in your quest to replicate natural daylight, let’s say you find a 6500K bulb with a low CRI. In this case, the light emitted by the bulb might look the same color as natural daylight (thanks to its color temperature), but as soon as the light lands on any colored surface, you will find that the colors do not appear the same as under natural daylight (because of the low CRI).
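The selection order described above, color temperature first and CRI second, can be written as a simple filter. This is a hypothetical sketch: the `Bulb` records, the 300K tolerance and the CRI floor of 90 are assumptions chosen for illustration, not industry thresholds.

```python
from dataclasses import dataclass


@dataclass
class Bulb:
    name: str
    cct_k: int   # correlated color temperature in kelvin
    cri: int     # general color rendering index


def pick_bulb(bulbs, target_cct_k, cct_tolerance_k=300, min_cri=90):
    """Filter by color temperature first, then prefer the highest CRI."""
    # Step 1: discard anything whose CCT is too far from the target
    in_range = [b for b in bulbs if abs(b.cct_k - target_cct_k) <= cct_tolerance_k]
    # Step 2: keep bulbs meeting the CRI floor and return the best one (or None)
    good = [b for b in in_range if b.cri >= min_cri]
    return max(good, key=lambda b: b.cri, default=None)


catalog = [
    Bulb("warm-95", 2700, 95),
    Bulb("daylight-70", 6500, 70),
    Bulb("daylight-92", 6500, 92),
    Bulb("cool-90", 5000, 90),
]
choice = pick_bulb(catalog, target_cct_k=6500)
print(choice.name if choice else "no match")  # daylight-92
```

The 2700K, 95 CRI bulb is rejected despite its high CRI because its color temperature is far from the target, and the 6500K, 70 CRI bulb is rejected because it fails the CRI floor, mirroring the two cases above.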